|

DOI: 10.7256/2585-7797.2023.1.40387

EDN: OCFBSP

Received:

06-04-2023

Published:

25-04-2023

Abstract:

Our article is presenting an attempt to apply NLP methods to optimize the process of text recognition (in case of historical sources). Any researcher who decides to use scanned text recognition tools will face a number of limitations of the pipeline (sequence of recognition operations) accuracy. Even the most qualitatively trained models can give a significant error due to the unsatisfactory state of the source that has come down to us: cuts, bends, blots, erased letters - all these interfere with high-quality recognition. Our assumption is to use a predetermined set of words marking the presence of a study topic with Fuzzy sets module from the SpaCy to restore words that were recognized with mistakes. To check the quality of the text recovery procedure on a sample of 50 issues of the newspaper, we calculated estimates of the number of words that would not be included in the semantic analysis due to incorrect recognition. All metrics were also calculated using fuzzy set patterns. It turned out that approximately 119.6 words (mean for 50 issues) contain misprints associated with incorrect recognition. Using fuzzy set algorithms, we managed to restore these words and include them in semantic analysis.

Keywords:

recognition of historical sources, OCR correction, fuzzy sets, NLP (natural language processing), text preprocessing, Birzhevye vedomosti, Levenshtein distance, content analysis, topic modeling, historical newspapers

This article is automatically translated.

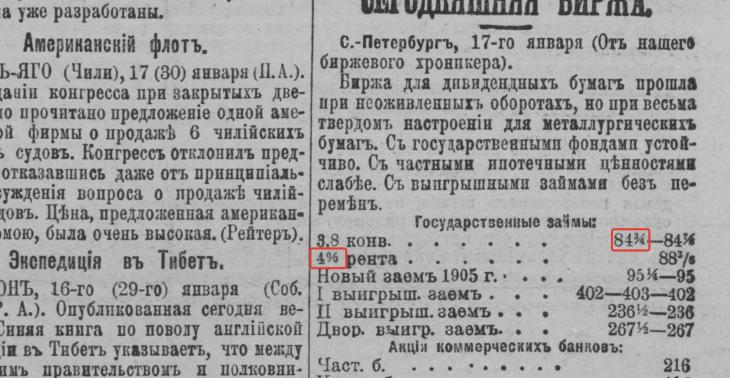

At the present stage, social and humanitarian sciences are increasingly turning to methods of machine text processing. For specialists in the field of historical informatics, the tasks of network analysis of semantic categories of newspaper texts have become classic [1]; determining authorship by stable patterns of style formalized in grammatical categories of text [2]; semantic comparison of text corpora by content analysis methods [3]. New directions are emerging: exploratory analysis of historical sources through thematic modeling [4], the use of artificial intelligence in the tasks of restoring damaged sources [5]. All these areas are united by the requirement for the quality of digitized and recognized text. Today, it seems superfluous to discuss the question of the degree of influence of the quality of text preparation on the final results of models of any level of complexity [6]. The world community of researchers is so clearly aware of the need to create the most accurate and flexible text recognition tools that this field can be called almost the only auxiliary discipline of historical science for which the development of AI and NLP (natural language processing) methods has led to a significant increase in the number of publications. Over the past three years alone, more than 40 publications devoted to the problems of translating historical sources into machine-readable form can be found on the Paperswithcode website [7]. And we are talking only about publications whose authors have left all their software developments in the public domain to the community. Our article is devoted to an attempt to apply modern NLP methods to optimize the text recognition process of historical sources. Today there are several popular platforms for this procedure. In this context, it is certainly worth noting the European Transcribus project, which explicitly declares the recognition of historical texts as its goal. The developers consider the presence of a large number of pre-trained language models for different eras (both for handwritten and printed texts) to be a key advantage of their platform. Transcribus is a platform for researchers that requires annual contributions and grants the right to participate in the project. We are talking about access to a huge library of user templates created using the built-in tools of the platform. Five such models are available for the Russian language: two for the printed type of the XVIII century and three for handwritten texts of the late XIX–early XX century. [8]. Another popular tool for recognizing historical texts is the ABBY FineReader program. The standard library of FineReader includes pre–revolutionary Russian, which can be extremely convenient for researchers working with newspaper or other printed texts of the XIX - early XX century. XX century . This program provides the ability to create custom templates that add new symbols to the FR libraries, trained according to the instructions of the researcher. In the case of newspaper materials of the early XX century, this function can significantly improve the quality of recognition of complex characters that have no analogues in standard dictionaries: for example, specific fractions or abbreviations.  Fig. 1. Scan of the number of Exchange statements for (17 (30) Jan.) 1905, No. 8618. The symbols are highlighted in red, for which the researcher will probably have to create custom templates in any of the OCR programs Another promising project is OCR–D - a large joint development of the Python module by German experts in the field of Digital Humanities and the API system for recognizing Gothic font of the XVI –XVIII centuries attached to it [9]. This project is interesting for the stated purpose of creating a set of publicly available packages containing recognition functions optimized for historical sources, as well as pre-trained models, the key feature of which will be the emphasis on high-quality segmentation of text on a digitized page. Today, the project is at the final stage of development, already in 2024, a large-scale application of the technology is planned to translate the collection of the library of Halle–Wittenberg University - a key partner of OCR-D into machine-readable form. In Russia, a similar project is being implemented, for example, at the Russian Academy of National Economy and Public Administration (RANEPA) by a team led by R.B. Konchakov [10]. The project involves the recognition of a collection of handwritten sheets of governor's reports, followed by the creation of a universal application for digitizing handwritten historical sources. And although the possibility of creating such a universal application is debatable today, this initiative is certainly a step towards further digitalization of historical research. * * * This brief overview precedes our research. The fact is that any researcher who decides to use the recognition tools of scanned texts will face a number of limitations on the accuracy of the pipeline (the sequence of recognition operations). And even the most well–trained models can give a significant error, at least because of the unsatisfactory condition of the source that has reached us: cuts, bends, blotches, erased letters - all this interferes with qualitative recognition. Because of this, trying to recognize the word "shareholder", the researcher can get "ak_.oner", "akpioner", "aktsnoier", etc. This article offers one of the solutions to the indicated problem. It is worth noting that the proposed technique is suitable only if the subsequent text processing preceding formalization will include lemmatization and filtering by the TF-IDF criterion (or a similar metric for assessing the significance of a word in the text and corpus). (see Appendix 1) Most of the historical research involving computerized text analysis assumes either a given or a desired set of categories reflecting the semantic core of the corpus of texts being studied. In the case of content analysis, such a category system is set by the researcher; in the case of thematic modeling, the system of topics and words reflecting these topics is determined by machine, without the participation of the researcher. Both methods involve a quantitative assessment of the presence in the texts of those words that mark the topics of interest to the researcher. In a number of studies, such a set was initially set at the preparatory stage [11].

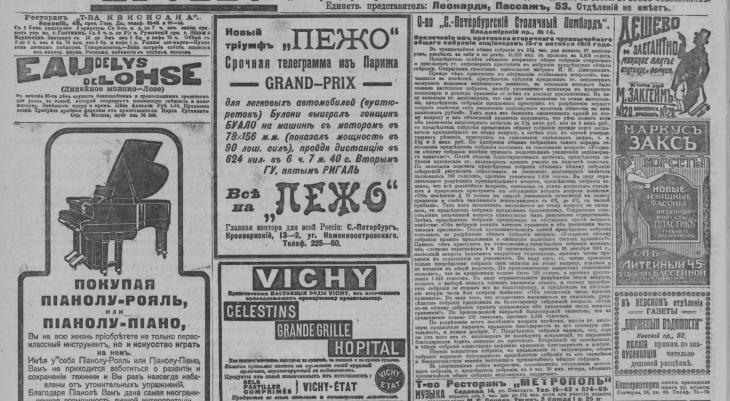

Our research, for which the optimization of text recognition of historical sources described in the article was developed, is devoted to the study of the profitability of securities on the St. Petersburg Stock Exchange at the beginning of the XX century from the perspective of behavioral finance. We are interested in the principles of valuation of public companies – how acceptable or insufficient levels of capitalization were determined, whether it is possible to identify industry specifics; issues of payment of coupons and dividends – what levels of profitability were considered sufficient and whether we can talk about the presence of any benchmark (standard paper with a risk-free rate) for the business environment of the early XX century. The newspaper "Birzhevye Vedomosti" was chosen as one of the main sources, in the daily issues of which there was an exchange column, where the chronicler's comment was printed, which described the mood of the bidders and often provided a detailed analysis of the current situation in the economy of the Russian Empire. Analytical notes on the profitability of securities are often found in the columns of the "Exchange Statements"; at what percentage the next issue of government debt securities is placed; at what level relative to the nominal value these securities are traded; whether the proposed percentage corresponds to the current statistics of the money market and how to explain the exchange rate dynamics of recent days. For the study, it was decided to collect a collection of such notes in order to identify stable analytical patterns characteristic of the stock exchange press in matters related to the profitability of securities. We used the materials of the digitized set of "Stock Exchange Statements" from the website of the Russian National Library (447 scanned issues for 1905 and 1913, morning and evening issues).

Fig. 2. Scan of the issue of the "Exchange Statements" for (25 Oct. (7 Nov.)) 1913, No. 13822 (25 Oct. (7 Nov.)). We are interested in the column "Extract from the minutes of the secondary extraordinary meeting of shareholders".

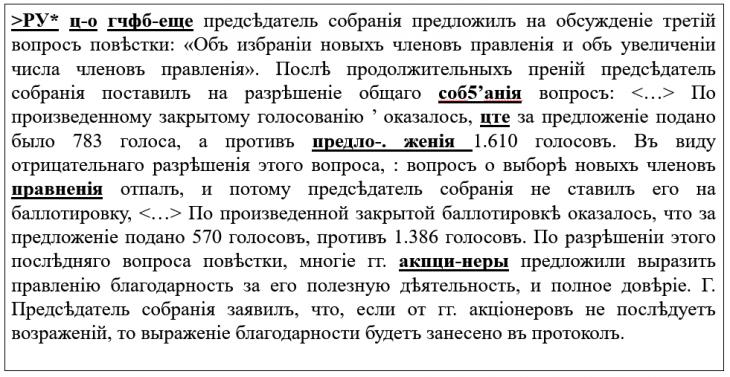

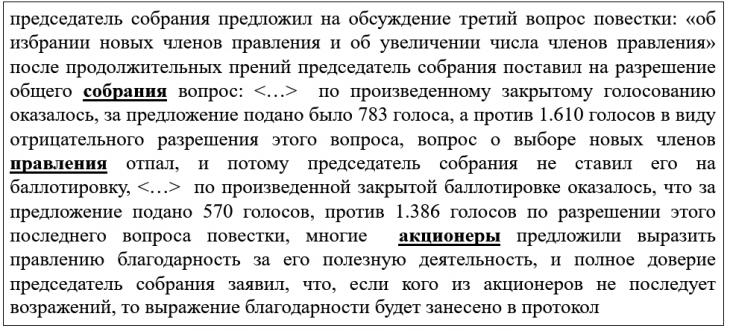

Fig. 3. Results of recognition of newspaper text. Figure 3 highlights examples of poorly recognized words that turn out to be important for the implementation of a formalized text analysis within the framework of our study. Also on the first line we can observe "garbage" sequences of characters recognized incorrectly. Our assumption was that, using a predefined set of words marking the presence of the topic of interest to us (securities yield), using the Fuzzy sets module from the NLP library SpaCy, we will be able to restore the templates of those words that, following the recognition procedure, were recognized with errors [12]. In order to use the full potential of the trained models of the Russian language in SpaCy (including for subsequent lemmatization and binarization), we translated the recognized text from pre-revolutionary orthography into modern one by using the Russpelling library for the Python programming language developed by I. Bern and D. Bernbaum as part of the Sofia University project on the application of NLP methods in the field of Slavic philology [13]. It is also worth noting here that the SpaCy library used below is available for both Python and the popular R programming language in the social sciences and humanities [14]. Then all the words in the recognized sources were checked for spelling through the pyenchant module based on the publicly available dictionary of the Russian language from LibreOffice. We assumed that all incorrectly recognized words would not pass this spelling test. Keeping all the words in their places, we selected all the words that failed the test in a separate collection. To restore the original word using the Levenshtein distance fuzzy set method (all fuzzy comparison operations in SpaCy are implemented through this metric, see Appendix 1), we needed to set a set of templates. So, we have chosen the following words that mark the topic of interest to us: Table 1. List of Fuzzy-match patterns and their corresponding word forms.Index | Pattern | Forms | 0 | | | bond | ['bond', 'bond', 'bond', 'bond', 'bond', 'bond', 'bond', 'bond', 'bond', 'bond'] | | 1 | percent | ['percent', 'percent', 'percent', 'percent', 'percent', 'percent', 'percent', 'percent', 'percent', 'percent'] | | 2 | percent | ['percent'] | | 3 | loan | ['loan', 'loan', 'loans', 'loans', 'loan', 'loan', 'loans', 'loan', 'loans', 'loans', 'loans', 'loans'] | | 4 | state | ['state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state', 'state'] | | 5 | funds | ['funds', 'fund', 'fund', 'funds', 'funds', 'fund', 'funds', 'funds', 'funds', 'fund', 'fund'] | | 6 | paper | ['paper', 'paper', 'paper', 'paper', 'paper', 'paper', 'paper', 'paper', 'paper', 'paper', 'paper'] | | 7 | discount | ['discount', 'discount', 'discount', 'discount', 'discount', 'discount', 'discount', 'discount', 'discount', 'discount', 'discount'] | | 8 | coupon | ['coupons', 'coupon', 'coupon', 'coupons', 'coupon', 'coupons', 'coupon', 'coupon', 'coupons', 'coupons'] | | 9 | rent | ['rent', 'rent', 'rent', 'rent', 'rent', 'rent', 'rent', 'rent', 'rent', 'rent', 'rent', 'rent'] | | 10 | mortgage | ['mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage', 'mortgage'] | | 11 |

commitment | ['obligation', 'obligations', 'obligation', 'obligations', 'obligation', 'obligation', 'obligations', 'obligations', 'obligations'] | | 12 | profitability | ['yield', 'yields', 'yields', 'yields', 'yields', 'yields', 'yields'] | | 13 | quote | ['quotes', 'quotes', 'quotes', 'quotes', 'quotes', 'quotes', 'quotes', 'quotes', 'quotes', 'quotes', 'quotes'] | | 14 | issue | ['emissions', 'emissions', 'emissions', 'emissions', 'emissions', 'emissions', 'emissions', 'emissions', 'emissions', 'emissions'] | | 15 | balance | ['balance sheet', 'balance sheets', 'balance sheets', 'balance sheets', 'balance sheet', 'balance sheets', 'balance sheets', 'balance sheets', 'balance sheets'] | | 16 | russian | Russian russian russian russian russian russian russian russian russian russianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussianrussian russian russian russian russian russian russian russian russianrussian | | 17 | values | ['Value', 'values', 'values', 'value', 'values', 'values', 'values', 'value'] | | 18 | monetary | ['monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary', 'monetary'] | | 19 | market | ['market', 'market', 'markets', 'markets', 'markets', 'markets', 'markets', 'market', 'markets', 'markets'] | | 20 | dividend | ['dividend', 'dividend', 'dividend', 'dividend', 'dividend', 'dividend', 'dividend', 'dividend', 'dividend', 'dividend'] | | 21 | profit | ['profit', 'profit', 'profit', 'profit', 'profit', 'profit', 'profit'] | | 22 | joint - stock | ['joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock', 'joint stock'] | | 23 | capital | ['capital', 'capitals', 'capital', 'capitals', 'capitals', 'capital', 'capital', 'capital', 'capital', 'capitals', 'capitals'] | | 24 | stock | ['shares', 'shares', 'shares', 'shares', 'shares', 'shares', 'shares', 'shares', 'shares'] | | 25 | limited | ['limited'] | | 26 | order | ['orders', 'orders', 'orders', 'orders', 'orders', 'orders', 'orders', 'orders', 'orders', 'orders', 'orders'] | | 27 | society | ['societies', 'societies', 'societies', 'society', 'society', 'society', 'society', 'societies', 'societies'] | | 28 | meeting | ['meetings', 'meeting', 'meeting', 'meeting', 'meetings', 'meetings', 'meetings', 'meetings', 'meetings'] | | 29 | shareholder | ['shareholders', 'shareholder', 'shareholders', 'shareholders', 'shareholders', 'shareholders', 'shareholders', 'shareholders', 'shareholder', 'shareholder', 'shareholder'] | | 30 | member | ['member', 'member', 'members', 'members', 'members', 'member', 'member', 'members', 'members', 'member'] | | 31 | governance | ['boards', 'boards', 'boards', 'board', 'board', 'board', 'board', 'board', 'boards', 'board'] | At the next stage, all the words that failed the spelling test are compared with the given set of templates. If by the Levenshtein distance (a threshold of 1 or 2 permutations was used) it is impossible to restore the template from the word submitted to the pipeline, it is deleted from the text. Here the question may arise as to how legitimate such a deletion can be. Since our study already uses a TF-IDF filter that weighs the relative importance of words for an individual text and the corpus as a whole, it is reasonable to assume that most of the poorly recognized words would in any case be subject to deletion as background vocabulary. If, according to our fuzzy match test, a given pattern can be restored from a word, we replace the misspelled word in the recognized text with a word pattern determined by the fuzzy–match method. So, the word "shareholder" will be replaced by "shareholder", ".ronds" by "funds", etc.  Fig. 4. The result of the fuzzy sets pipeline operation. The text shown in Figure 3 has been successfully cleaned and restored. In Fig. 4. it is possible to observe the result of operations to transform distorted text, which is now conveniently amenable to semantic analysis by all available means of computerized text processing.

To check the quality of the text recovery procedure on a sample of 50 issues of the newspaper "Birzhevye Vedomosti", we calculated estimates of the number of words that would not be included in the semantic analysis due to incorrect recognition. All metrics were calculated using fuzzy set patterns as well. It turned out that, on average, there are 938.9 words for the number of "Exchange Statements" marking the topic of our research – trading and financial transactions with securities. Of these, an average of 87.2% of words are initially correctly recognized. Approximately 119.6 words (an average of 50 numbers) contain typos associated with incorrect recognition. Thanks to the use of fuzzy set algorithms, we were able to restore these words and include them in semantic analysis. We believe that filling in 12.8% of the words potentially related to the topic under study (listed in Table 1) is a good result that significantly improves the quality of further semantic analysis of the text by computer modeling methods. With the help of the proposed methodology, we were able to process all the newspaper issues included in our collection. For interested readers, we leave a link to access the program code proposed by the author, source materials and recognized texts [15]. *** Thus, it can be stated that the use of fuzzy set methods can significantly improve the quality of text recognition in cases where recognition is not considered as the ultimate goal of a researcher working with documents. Of course, the described technique is a special case for the task of thematic modeling and/or content analysis, when the exclusion of some words of the document is part of a set of operations for text preprocessing. We believe that the use of fuzzy set algorithms in text recognition projects in archival storage will require a fundamentally different pipeline architecture. Not being able to exclude words, and also following the need to observe the grammatical consistency of the text, a researcher intending to apply fuzzy set algorithms will solve a completely different order of problem that requires detailed consideration of the context of each word. However, at the same time, the example presented in this article shows that the field of Fuzzy technologies has significant potential in optimizing text recognition. Appendix 1: Dictionary of Definitions The Levenshtein distance. In information theory, linguistics, and computer science, the Levenshtein distance (LEV) is a string metric for measuring the difference between two sequences. Informally, the Levenshtein distance between two words can be interpreted as the minimum number of one-character edits (inserts, deletions or substitutions) required to replace one word with another. For example, LEV("Krt", "Cat") = 1, since it will take one replacement of "P" with "O". It is named after the Soviet mathematician Vladimir Levenstein, who proposed this metric in 1965. Fuzzy set is a concept introduced by Lotfi Zadeh in 1965 in the article "Fuzzy Sets", in which he expanded the classical concept of a set by assuming that the characteristic function of a set (called the membership function for a fuzzy set by Zadeh) can take any values in the interval (0,1), and not just the values 0 or 1. In our article, we assume, based on fuzzy logic, that although the word "acp-oner" is written incorrectly, the object itself (the word in the text under study) is reduced to the form "shareholder", and the permissible measure of "fuzziness" is the Levenshtein distance equal to 2. TF-IDF (from the English TF — term frequency, IDF — inverse document frequency) is a statistical measure used to assess the importance of a word in the context of a document that is part of a collection of documents or a corpus. The weight of a certain word is proportional to the frequency of use of this word in the document and inversely proportional to the frequency of use of the word in all documents of the collection.

References

1. Soloshchenko N.V. (2021) Large-circulation newspaper "Babaevets" as a source on the history of the food industry of the USSR during the first five-year plan (the experience of content analysis and network analysis) // Historical information science, 2, 1-23. doi: 10.7256/2585-7797.2021.2.35152

2. Kale, Sunil Digamberrao and Rajesh Shardanand Prasad. “A Systematic Review on Author Identification Methods.” Int. J. Rough Sets Data Anal. 4 (2017): 81-91.

3. Garskova I.M. (2018) International scientific conference "Analytical methods and information technologies in historical research: from digitized data to knowledge increment"// Historical information science, 4, 143-151. doi: 10.7256/2585-7797.2018.4.28538

4. Tze-I Yang, A.J.Torget, R.Mihalcea. Topic modeling in historical newspapers. 2011

5. Assael, Y., Sommerschield, T., Shillingford, B. et al. Restoring and attributing ancient texts using deep neural networks. Nature 603, 280–283 (2022).

6. Lopresti, Daniel. (2009). Optical character recognition errors and their effects on natural language processing. IJDAR. 12. 141-151.

7. Papers with Code. URL: https://paperswithcode.com/sota

8. Transkribus. Public models. URL: https://readcoop.eu/transkribus/public-models/

9. OCR-D. URL: https://ocr-d.de/en/

10. Report by R.B. Konchakov (RANEPA) and S.V. Bolovtsov (RANEPA) "Recognition of the reports wrote by Russian Empire province governors: challenges and approaches" was presented at the seminar "Artificial intelligence in historical research: automated recognition of handwritten historical sources", organized by the History and Computer Association and RANEPA on the RANEPA grounds on February 11, 2023 URL:https://ion.ranepa.ru/news/budushchee-istorii-kak-tsifrovye-navyki-otrazhayutsya-na-rabote-istorikov/

11. Soloshchenko N.V. Large-circulation press as a source for studying the formation of the "new man" in the soviet industry of the first Five-Year Plans (2019) // Historical information science, 3, 106-117. doi: 10.7256/2454-0609.2019.3.29991

12. SpaCy. URL: https://spacy.io/

13. Russpelling. URL: https://github.com/ingoboerner/russpelling

14. SpaCyR. URL: https://cran.r-project.org/web/packages/spacyr/vignettes/using_spacyr.html

15. GitHub. URL: https://github.com/iodinesky/Fuzzy-sets-in-historical-sources-OCR

Peer Review

Peer reviewers' evaluations remain confidential and are not disclosed to the public. Only external reviews, authorized for publication by the article's author(s), are made public. Typically, these final reviews are conducted after the manuscript's revision. Adhering to our double-blind review policy, the reviewer's identity is kept confidential.

The list of publisher reviewers can be found here.

The reviewed article refers to an extremely relevant area of research related to the creation of electronic versions and libraries of historical sources by recognizing their scanned images. The article proposes an original technique for optimizing the text recognition process of historical sources based on NLP (natural language processing) methods. The methodology of the article is based on modern ideas about the subject areas of interaction between the humanities (primarily historical) and the possibilities of computer science, mainly with those areas of it that are related to data mining, in particular the implementation of fuzzy set methods. The relevance of the research is determined by the huge and constantly growing interest of the scientific community in creating full-fledged libraries of historical sources that allow dealing directly with their digital versions, making it possible to apply a range of methods and technologies for their meaningful study, in particular content analysis. The scientific novelty of the reviewed article lies in the presentation of a new text recognition technique and the presentation of the results of its use, which clearly indicate that this is a step forward in the direction of further optimization of recognition of historical texts. The structure and content of the article fully corresponds to modern ideas about the presentation of the results of scientific research. After the problem is posed, the main tasks of the work are determined, a brief description of a number of platforms for text recognition of historical sources and the implementation of projects based on them is given. In the next section of the article, using the example of the newspaper "Birzhevye Vedomosti", the actual method of optimizing recognition for correcting poorly recognized words is described. The presented examples of purification and restoration of texts show the usefulness and effectiveness of the technique using fuzzy sets. The article contains successful illustrations that allow you to better reveal its content, and is complemented by a small dictionary of terms used in the presentation of the material. I would especially like to note the language and style of the reviewed text, which, despite the obvious complexity of the content, sets out the main points of the research clearly and is quite accessible not only for text recognition specialists, but also for a wide range of potential readers of the article. The bibliography of the article is small in volume, corresponding to a rather specific research problem. It contains links to the considered platforms for recognizing historical texts, as well as some studies using content analysis. The article does not contain provisions related to controversial issues due to its specific methodological content. Although the presented research is devoted to rather specific methodological issues, it contributes to the complex and extremely important problem of recognizing historical texts. The article does not contain any pronounced flaws, it fully corresponds to the format of the journal and, of course, will find its reader, therefore it is recommended for publication.

|

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.